Statistical Graphics vs. InfoVis

The current issue of the Statistical Computing and Graphics Newsletter features two invited articles, which both look at the “graphical display of quantitative data” – one from the perspective of statistical graphics, and one from the perspective of information visualization.

Robert Kosara writes from an InfoVis view:

Visualization: It’s More than Pictures!

Information visualization is a field that has had trouble defining its boundaries, and that consequently is often misunderstood. It doesn’t help that InfoVis, as it is also known, produces pretty pictures that people like to look at and link to or send around. But InfoVis is more than pretty pictures, and it is more than statistical graphics.

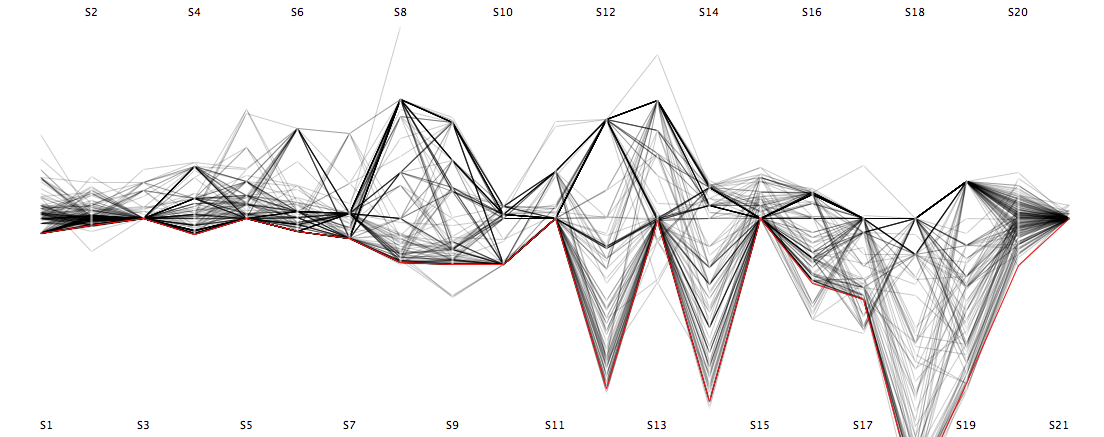

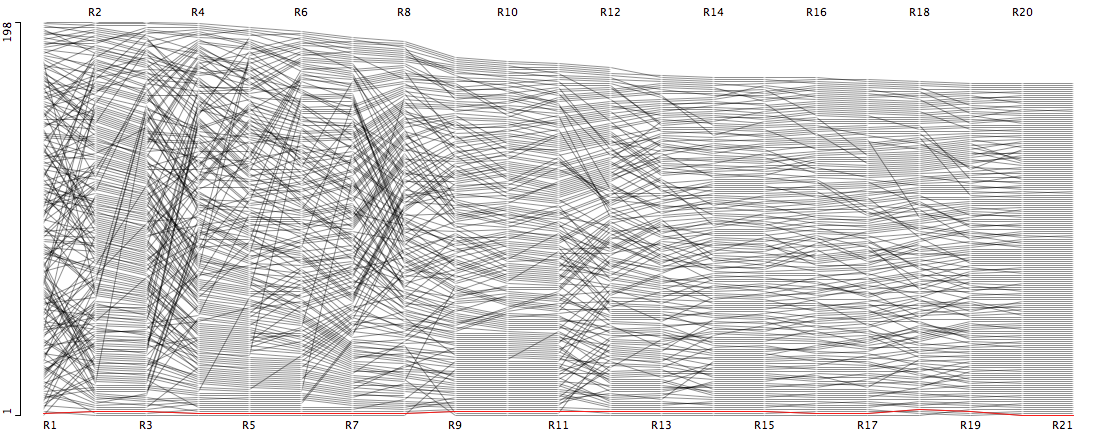

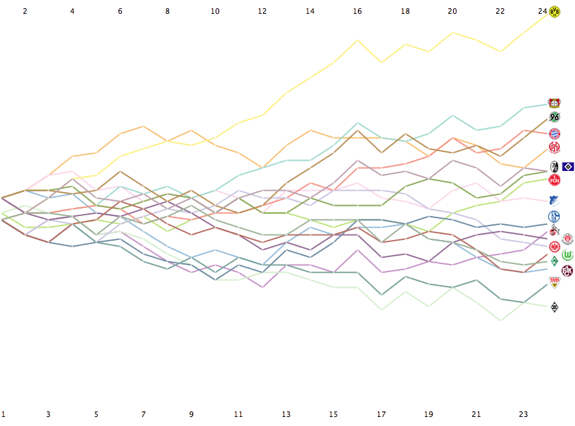

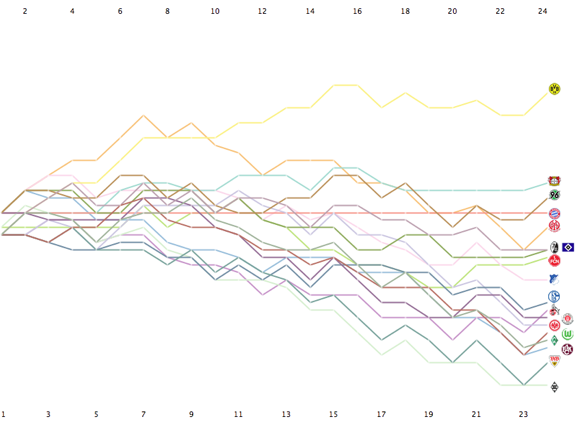

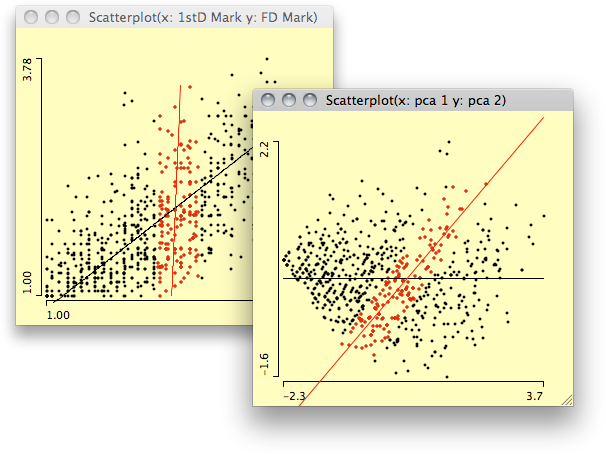

The key to understanding InfoVis is to ignore the images for a moment and focus on the part that is often lost: interaction. When we use visualization tools, we don’t just create one image or one kind of visualization. In fact, most people would argue that there is not just one perfect visualization configuration that will answer a question [4]. The process of examining data requires trying out different visualization techniques, …

Andrew Gelman and Antony Unwin write from a statistical graphics view:

Visualization, Graphics, and Statistics

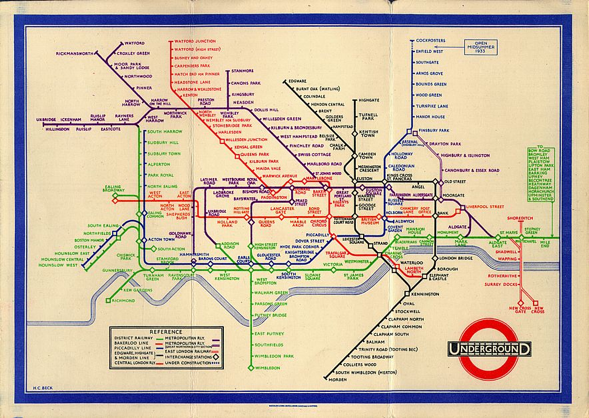

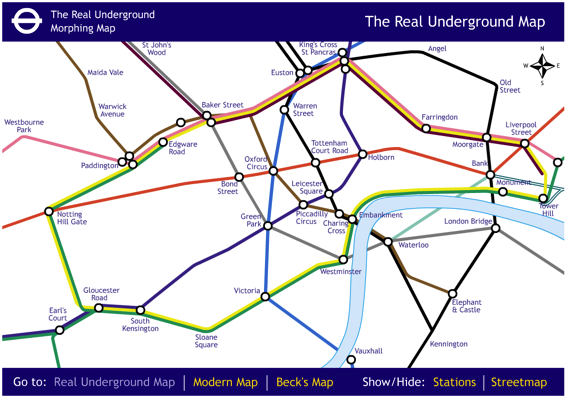

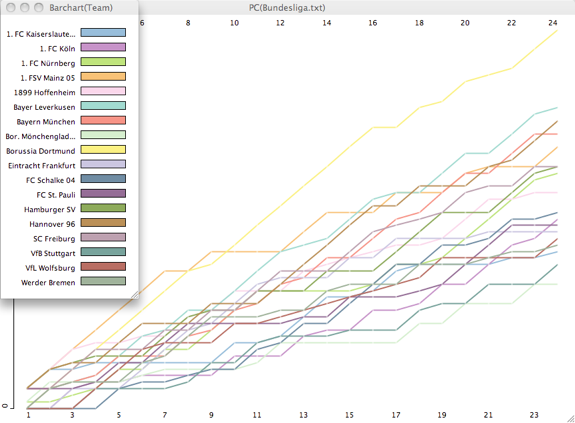

Quantitative graphics, like statistics itself, is a young and immature field. Methods as fundamental as histograms and scatterplots are common now, but that was not always the case. More recent developments like parallel coordinate plots are still establishing themselves. Within academic statistics (and statistically-inclined applied fields such as economics, sociology, and epidemiology), graphical methods tend to be seen as diversions from more “serious” analytical techniques. Statistics journals rarely cover graphical methods, and Howard Wainer has reported that, even in the Journal of Computational and Graphical Statistics, 80% of the articles are about computation, only 20% about graphics.

Outside of statistics, though, infographics and data visualization are more important. Graphics give a sense of the size of big numbers, …

You may be surprised by how much the two sides agree, and by what they disagree on. Neither may match your expectations, but both can be the start of an open discussion.

This blog post is meant to be the platform for this discussion, and we are looking forward to reading your comments …

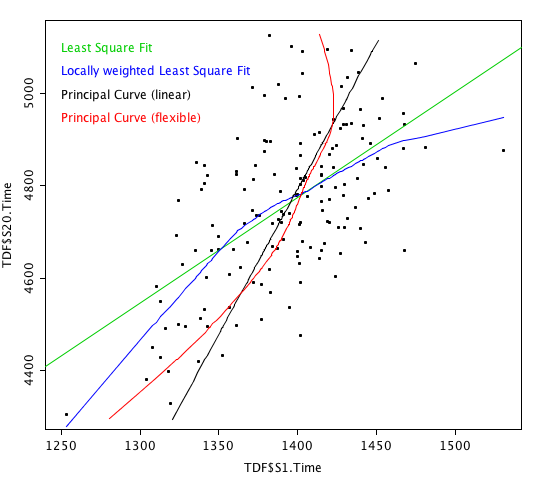

The last lowlight to present here is the “histogram” in Figure 9.4 on page 202, which is – hey – just a scatterplot; they are not that hard to tell apart.

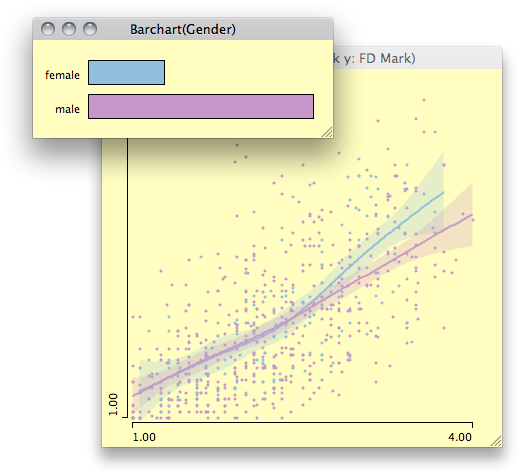

The plot shows the results of the first and the last time trial of the 2005 Tour de France. Depending on the type of rider, we might expect one correlation or the other between the two dimensions, and it is not obvious how the times should depend on each other.
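One way to put a number on the relationship seen in such a scatterplot is the Pearson correlation coefficient. The following is only a minimal sketch: the function name `pearson` and the lists `first_tt` and `last_tt` are made up for illustration, and the times are invented placeholders, not the actual 2005 results.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squares around the means
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Placeholder times in seconds, invented for illustration only.
# These are NOT the actual 2005 Tour de France results.
first_tt = [1250.0, 1275.0, 1300.0, 1320.0, 1360.0]
last_tt = [4300.0, 4350.0, 4420.0, 4400.0, 4550.0]

r = pearson(first_tt, last_tt)
print(f"r = {r:.3f}")
```

A single coefficient like this summarizes only the linear trend. If different types of riders pull in different directions, the scatterplot will show that structure while the coefficient hides it.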