There is an impressive 54-minute documentary on “visualization in the media” at datajournalism.stanford.edu.

The site also has quite a bit of additional material arranged, literally, around the video. The story seems to be built around the paper by Segel and Heer (or at least it crosses paths with it every now and then).

Here are some significant quotes (with some picky comments from me):

_______________ I Introduction _______________

“We are interested in democratizing visualization”

I am not sure what that really means. Giving access to a very limited set of visualizations might generate 99% chart junk and a few good things – whom did it help in the end?

“The best way for people to learn about visualizations is to make them”

That is certainly the case for us experts, but don’t we need basic training? You won’t tell a stats 101 student “the best way to learn about statistical models is to create them; download R and be happy!”, would you?

“This is looking at air traffic over North America”

Hey, come on. This one is really old hat (see here). Of course we have faster computers and better rendering machines, but what is the conceptual contribution in the year 2010?

_______________ II Data Vis in Journalism _______________

“… what visualization is helpful for is putting data into context”

Nothing to add here.

“… how do you get a story from the data?”

That should be an easy one for the media, as they start with the story.

“… look very nice, and are almost completely incomprehensible.”

It is indeed very tempting to create very pretty pictures whose practical value (i.e., what can be read from the visualization without knowing the story beforehand) is very limited.

_______________ III Telling “Data Stories” _______________

“Here is the data, and you can play with it as you want…”

This is a dangerous one. There are certainly visualizations where the reader can make good use of some degrees of freedom to manipulate and explore, but too many examples show that the reader is just left alone, poking around in a pile of data.

_______________ IV A New Era in Infographics _______________

“Unfortunately Infographics is something that is dominated by fashion …”

Well, to be a good data visualizer, you need to be some sort of artist – as beauty helps. With an artist’s attitude come vanity and fashion. As long as this happens to people rooted in a quantitative education, I think we are safe – if not …

“… some of the people doing it like rock stars!”

That sounds similar to the fashion trends, but I think it is even worse. Rock stars aim at publicity – by any means.

_______________ V Life as a Data Stream _______________

“… in a future we are heading to, there are sensors everywhere …”

I am not looking forward to being part of this future …

_______________ VI Exploring Data _______________

“The key part of exploratory data analysis for many instances is being able to rapidly iterate …”

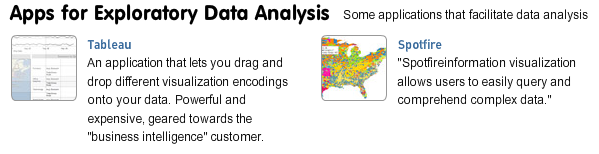

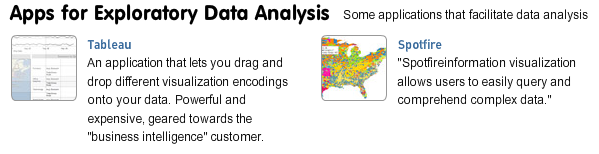

This is where the toolkits part ways with “closed”, general-purpose tools. With a toolkit you are still very close to programming – and that takes time. More “complete” tools may get you up and running far faster, though with limitations.

_______________ VII Technologies and Tools _______________

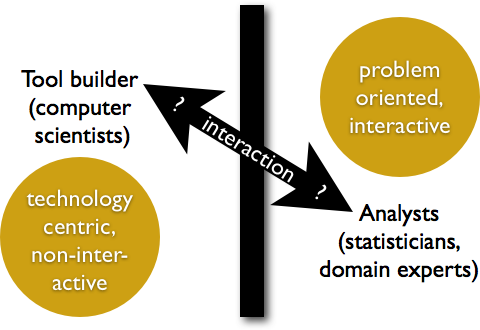

“… in many ways of how much a software development shop we’ve become …”

This is a crucial point. Often the creativity and the technical skills won’t meet in one person. But this is a common problem for all types of software and often culminates in the problems of synchronizing the developer’s and user’s views.

In the end, I am not surprised that the tools for Exploratory Data Analysis (Apps for EDA) listed are all from the computer-science-based InfoVis domain, with nothing from statistics. Needless to say, Tableau is based on Lee Wilkinson’s book Grammar of Graphics:

(One question is left though: what makes Martin Wattenberg smile so persistently?)

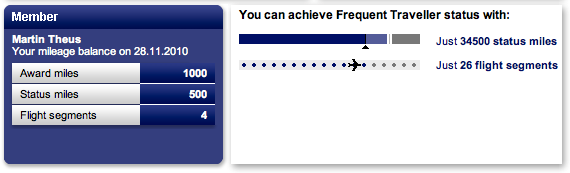
