(Some) Truth about Big Data

I read the President’s Corner of the last ASA Newsletter by Bob Rodriguez the other day and had a flashback to the time when statistics met Data Mining in the late 90s. Daryl Pregibon – who happened to be my boss at that time – put forth a definition of data mining as “statistics at scale and speed”. This may be only one way to look at it, but it shows that there is certainly a strong link to what we have done in statistics for a long time, and that the essential difference may lie more along technological lines, i.e., in data storage and processing. Bob says essentially the same thing about Big Data 15 years later, when he writes that “statisticians must be prepared for a different hardware and software infrastructure”.

Whereas the inventors and promoters of the new buzzword Big Data have even created a new profession, the “Data Scientist”, they largely lack ideas that describe their conceptual activities (at least ideas we haven’t been using in statistics for decades …).

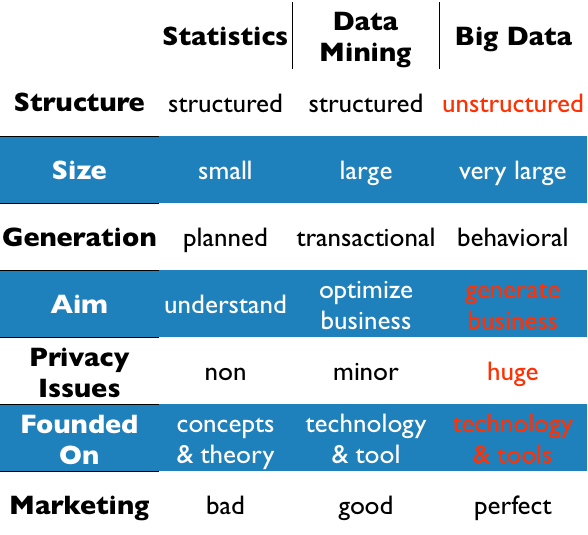

Let me try to put Big Data into perspective by contrasting it with statistics and Data Mining:

Whereas statistics and data mining usually deal with fairly well-structured data, big data is usually more or less unstructured. Classical statistical procedures were based on (small) planned samples, and data mining input was usually derived from (large) transactional sources or remote sensing. Big data goes even further: it collects data at points where we don’t expect anyone to be listening and stores it in immense data storage arrays (some people call it “the cloud”). Be it our location via mobile phones, the websites we visit via cookies or “likes”, or posts in the so-called social web, someone is recording that data and looking for the next opportunity to sell it, whether we agree or not.

The marketing aspect is even more important for Big Data than it was for data mining. Much as in US political campaigns, consulting companies like McKinsey publish papers like “Big data: The next frontier for innovation, competition, and productivity”, which tell us that we are essentially doomed if we do not respond to the new challenge, and, by the way, they are ready to help us for just a few bucks …

What is missing, though, are the analytical concepts to deal with Big Data, and, to be honest, there is no way around good old statistics. Companies like SAS build high-performance tools that can now connect to Hadoop etc. and compute a good old logistic regression on billions of records in just a few minutes. The only question is: who would ever feel the need to do that, or, even worse, trust the results without further diagnostics?

The comparison has been made by a person who does not really know statistics. As statisticians, we are trained to analyze both small and large data sets, from observational as well as experimental studies, and we are prepared both in theory and in the use of new technology and tools.
